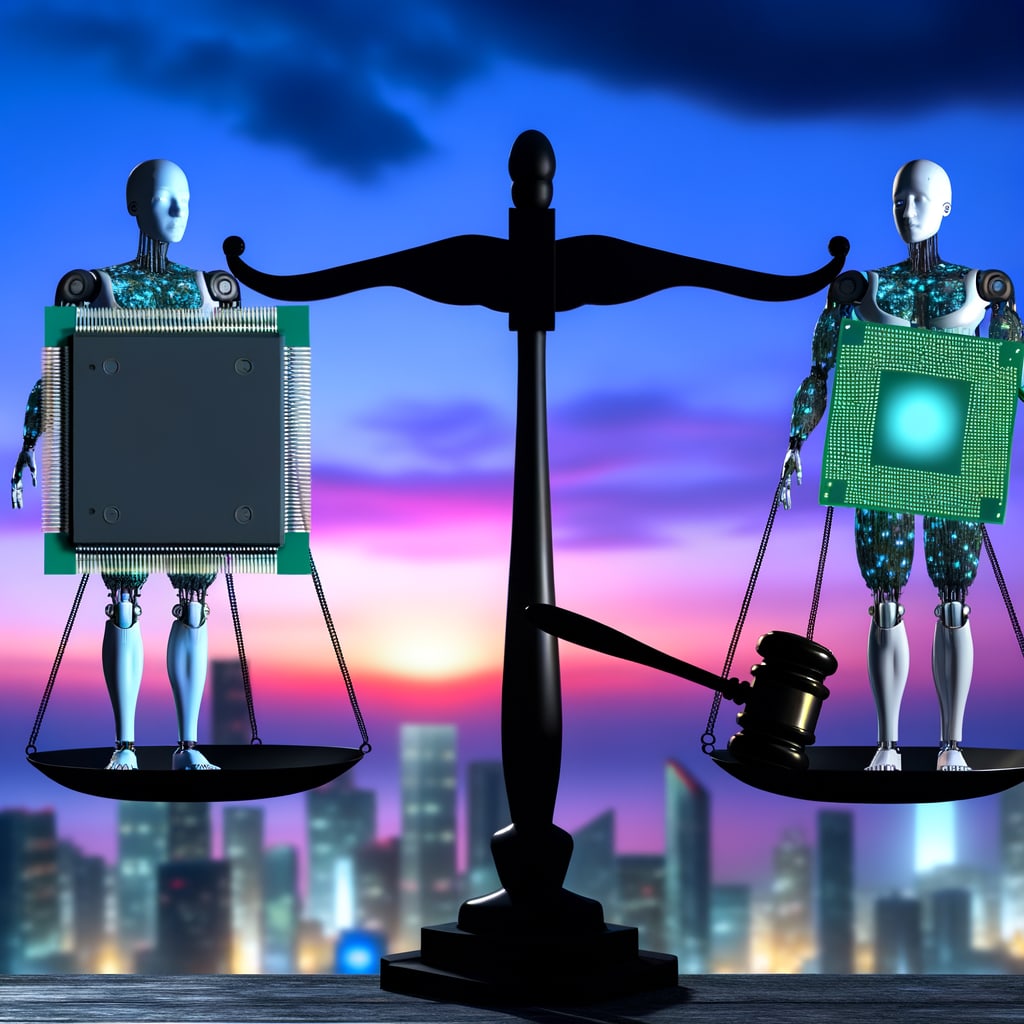

Anthropic, OpenAI Square Off Over AI Regulation Amid Rampant Development Risks

In a clash over artificial intelligence (AI) safety and regulation, OpenAI and Anthropic, two major players in the AI industry, have each backed well-funded political groups that will face off in the US midterm elections. The confrontation comes amid warnings from industry experts that AI could be misused to commit grave crimes, including the development of chemical weapons.

Background and Context

Anthropic, a global AI leader, recently raised $30 billion in its latest funding round, valuing the company at a staggering $380 billion. This is more than double its valuation from just five months prior, reflecting the breakneck pace of AI investments globally. However, this rapid advancement in AI tools has not been without its critics.

A former head of security at Anthropic resigned, issuing a warning about the risks that advances in AI tools may pose to humanity. Similarly, Mrinank Sharma, an Oxford graduate who led Anthropic's Safeguards Research Team, resigned with a cryptic warning about interconnected crises, announcing his plans to become invisible for a period of time.

Key Developments

In response to these concerns, Anthropic has injected $20 million into Public First Action, a political advocacy group that backs candidates favoring AI regulation. Anthropic's move places it in direct opposition to OpenAI, which has advocated for less stringent AI regulations. The donation marks the San Francisco-based AI lab's first known political intervention.

"The companies building AI have a responsibility to help ensure the technology serves the public good, not just their own interests," Anthropic said in a statement. "We don't want to sit on the sidelines while these policies are developed."

Implications and Reactions

The escalating AI wars have moved from Silicon Valley boardrooms to television screens, with Anthropic making its Super Bowl debut with a high-stakes ad campaign directly challenging OpenAI. These developments come amid a global AI arms race, with tech giants preparing to unveil their flagship products.

The AI regulation issue has also reached the international stage, with Brazil's PGE (Procuradoria-Geral Eleitoral) suggesting stricter restrictions on the use of AI in election propaganda, deeming the current rules insufficient.

Meanwhile, Elon Musk has slammed Anthropic's AI models as "misanthropic" and "evil" in a scathing social media post. Musk's post highlights the growing controversy surrounding AI and its potential misuse.

Conclusion

The debate around AI governance continues to intensify, with key industry players taking opposing stances. As AI advances at a rapid pace, the stakes for national security and public safety are rising, and the need for comprehensive AI regulation that balances innovation with safety is becoming increasingly critical.

While the debate continues, the world watches and waits, aware that the decisions made now will shape the future of AI and, potentially, humanity itself. The stage is set for a showdown in the upcoming US midterm elections, with the outcome likely to have far-reaching implications for the future of AI regulation.